eTamu.id – Companies rely on Big Data more and more. They all want larger amounts of information that help them reach the right customer. As a result, dozens of tools have emerged to handle Big Data, but which one should you choose? We help you with a selection of Big Data tools that you should know about.

The 5Vs to define a Big Data tool

There are different tools on the market that will help you manage your data. If you want to know which one is best for you, keep in mind the 5 Vs that a tool must meet to be classified as powerful:

- Volume: The tool must be able to analyze large amounts of data, much of it unstructured (from gigabytes to petabytes).

- Velocity: This refers to the rate at which data is received and how quickly it is acted upon. Some tools are connected to the internet and offer a real-time view.

- Variety: The tool must accept different data formats (structured and unstructured).

- Veracity: A tool that does not provide reliable information is useless. It is important that the chosen system mitigates data bias, uncovers duplicate data, and detects anomalies or inconsistencies.

- Value: The most important thing about a Big Data tool is that the data obtained adds value to the business. What counts as value should be defined by the analytics team.
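As an illustration of the veracity checks mentioned in the list above, here is a minimal Python sketch that uncovers duplicate records and flags anomalous values. The function names, the sample orders, and the choice of a median-based outlier score (with the commonly used 3.5 cutoff) are assumptions made for this example, not features of any particular tool.

```python
from statistics import median


def find_duplicates(records):
    """Return every record that repeats an earlier, identical record."""
    seen, duplicates = set(), []
    for record in records:
        key = tuple(sorted(record.items()))  # hashable fingerprint of the record
        if key in seen:
            duplicates.append(record)
        else:
            seen.add(key)
    return duplicates


def find_anomalies(values, threshold=3.5):
    """Flag values whose modified z-score (median/MAD based) exceeds threshold."""
    med = median(values)
    mad = median(abs(v - med) for v in values)  # median absolute deviation
    if mad == 0:
        return []  # no spread to measure against
    return [v for v in values if 0.6745 * abs(v - med) / mad > threshold]


# Hypothetical sample data: one repeated order and one suspicious amount.
orders = [
    {"id": 1, "amount": 20.0},
    {"id": 2, "amount": 22.5},
    {"id": 1, "amount": 20.0},
    {"id": 3, "amount": 19.0},
    {"id": 4, "amount": 950.0},
]

duplicates = find_duplicates(orders)
anomalies = find_anomalies([order["amount"] for order in orders])
```

A median-based score is used here because a single extreme value distorts the mean and standard deviation of a small sample, which can hide the very anomaly you are looking for.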

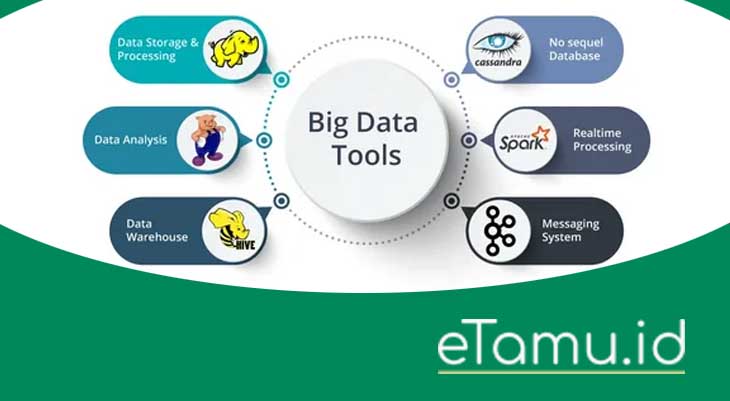

Software for Big Data: essential tools

Data analysis is vital for companies, as it provides very valuable information that allows creating strategies focused on attracting new customers, and also on increasing sales.

However, the vast amount of data obtained in these processes is very difficult to analyze without the appropriate means. Here is a selection of tools that can be useful for handling Big Data:

1. Apache Hadoop

It is one of the most widely used Big Data tools. Companies such as Facebook and The New York Times have used it, and it has served as a model for many of the tools that followed.

Hadoop is a free and open source framework that allows you to process large volumes of data in batches using simple programming models. It is scalable, so you can go from running it on a single server to running it on a cluster of many machines.
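The best-known of these simple programming models is MapReduce. The sketch below imitates its three steps (map, shuffle, reduce) in plain Python on a single machine. It does not use Hadoop itself, and the function names are chosen only for this illustration.

```python
from collections import defaultdict


def map_phase(line):
    """Emit a (word, 1) pair for every word in one input line."""
    return [(word.lower(), 1) for word in line.split()]


def shuffle(pairs):
    """Group the emitted values by key, as a framework would between phases."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key, values):
    """Combine all values collected for one key into a single result."""
    return key, sum(values)


def word_count(lines):
    pairs = [pair for line in lines for pair in map_phase(line)]
    return dict(reduce_phase(key, values) for key, values in shuffle(pairs).items())


counts = word_count(["big data tools", "big data"])
```

In a real cluster the map and reduce functions run on many machines at once, and the framework takes care of moving the grouped data between them.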

It also provides security features, including authentication and authorization for access over HTTP, and its file system supports POSIX-style permissions and extended attributes.

2. Elasticsearch

Elasticsearch allows you to process large amounts of data and follow how they evolve in real time. In addition, when combined with visualization tools, it provides charts that make the information easier to understand.

Its main function is to index different types of content for use cases such as application and website search, log analytics, infrastructure metrics and performance monitoring, and geospatial data visualization, among others.

Once indexed, it is possible to perform complex queries on this data as well as aggregations to retrieve summaries.
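To make the idea of indexing, querying, and aggregating more concrete, here is a toy inverted index in plain Python. It is only a sketch of the general technique: the `TinyIndex` class, its methods, and the sample log entries are hypothetical and are not the Elasticsearch API.

```python
from collections import Counter, defaultdict


class TinyIndex:
    """A toy inverted index: maps each token to the documents containing it."""

    def __init__(self):
        self.docs = {}
        self.postings = defaultdict(set)

    def index(self, doc_id, doc):
        """Store the document and register every token of its message."""
        self.docs[doc_id] = doc
        for token in doc["message"].lower().split():
            self.postings[token].add(doc_id)

    def search(self, *terms):
        """Return the sorted IDs of documents that contain all given terms."""
        sets = [self.postings.get(term.lower(), set()) for term in terms]
        return sorted(set.intersection(*sets)) if sets else []

    def count_by(self, field, doc_ids):
        """Aggregate: count the matching documents per value of a field."""
        return Counter(self.docs[doc_id][field] for doc_id in doc_ids)


logs = TinyIndex()
logs.index(1, {"level": "error", "message": "disk full on node1"})
logs.index(2, {"level": "info", "message": "backup finished on node1"})
logs.index(3, {"level": "error", "message": "disk failure on node2"})

disk_hits = logs.search("disk")
levels = logs.count_by("level", [1, 2, 3])
```

Because the index already knows which documents contain each token, a search only has to intersect a few sets instead of scanning every document.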

One of the advantages of this tool is that it can be expanded with the Elastic Stack, a package of products that increase the capabilities of Elasticsearch. Mozilla and Etsy are some of the companies that have used this Big Data tool.

3. Apache Storm

Apache Storm is an open-source Big Data tool that can be used with any programming language, thanks to a JSON-based protocol for communicating with components written in other languages.

It processes large amounts of data in real time. You define topologies that transform and analyze the data continuously while streams of information keep entering the system.
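In Storm, a topology connects data sources (called spouts) to processing steps (called bolts). The following sketch mimics that shape with Python generators on a single machine. It is not Storm code, and the function names are assumptions made for the example.

```python
from collections import Counter


def sentence_spout(sentences):
    """Source of the stream: emits one sentence at a time."""
    for sentence in sentences:
        yield sentence


def split_bolt(stream):
    """First processing step: splits each sentence into lowercase words."""
    for sentence in stream:
        for word in sentence.split():
            yield word.lower()


def count_bolt(stream):
    """Second step: emits the running count of each word as it arrives."""
    counts = Counter()
    for word in stream:
        counts[word] += 1
        yield word, counts[word]


# Wire the steps together; nothing runs until the stream is consumed.
topology = count_bolt(split_bolt(sentence_spout([
    "storm processes streams",
    "streams never end",
])))
updates = list(topology)
```

Each update is produced as soon as its word arrives, which is the key difference from batch processing, where results appear only after all the input has been read.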

4. MongoDB

It is a free NoSQL database (non-relational database) optimized to work with groups of data that vary frequently, or that are semi-structured. It is a distributed database at its core so high availability, scalability, and distribution are already built in.

It is used to store data from mobile applications and content management systems, among others. It is used by companies such as Bosch and Telefónica.
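The following sketch shows what semi-structured data looks like in practice: documents in the same collection that do not share the same fields. It uses plain Python dictionaries rather than a MongoDB driver, and the product data and helper names are hypothetical.

```python
# Hypothetical collection: each document carries only the fields it needs.
products = [
    {"_id": 1, "name": "Drill", "price": 89.0, "voltage": 18},
    {"_id": 2, "name": "Router", "price": 59.0, "wifi": "802.11ax", "ports": 4},
    {"_id": 3, "name": "Manual", "price": 0.0},
]


def find_documents(collection, **criteria):
    """Return the documents whose fields equal every given criterion."""
    return [
        doc for doc in collection
        if all(doc.get(field) == value for field, value in criteria.items())
    ]


def fields_in_use(collection):
    """List every field name that appears in at least one document."""
    return sorted({field for doc in collection for field in doc})


cordless = find_documents(products, voltage=18)
all_fields = fields_in_use(products)
```

In a relational table, the `voltage`, `wifi`, and `ports` columns would have to exist for every row. Here, a new kind of product can simply bring its own fields.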

5. Apache Spark

This is a free and open source tool that connects multiple computers and allows them to process data in parallel. It includes libraries for machine learning, SQL, and stream processing, and it keeps intermediate data in memory where possible, which makes it an efficient system.

The most notable feature of this Big Data tool is its speed: the project has claimed performance up to 100 times faster than Hadoop MapReduce for certain in-memory workloads. Spark analyzes data in batches and also in real time, and allows you to build applications in different languages: Java, Python, R and Scala.
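The idea of splitting data into partitions and processing them in parallel can be sketched with Python's standard library. This is not Spark code: a thread pool stands in for the worker machines, and the function names are assumptions made for the example.

```python
from concurrent.futures import ThreadPoolExecutor


def partition(data, n):
    """Split data into at most n contiguous chunks of roughly equal size."""
    if not data:
        return []
    size = -(-len(data) // n)  # ceiling division
    return [data[i:i + size] for i in range(0, len(data), size)]


def partial_sum_of_squares(chunk):
    """Work done independently on one partition."""
    return sum(x * x for x in chunk)


def parallel_sum_of_squares(data, workers=4):
    """Process every partition in the pool, then combine the partial results."""
    chunks = partition(data, workers)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return sum(pool.map(partial_sum_of_squares, chunks))


total = parallel_sum_of_squares(list(range(1, 101)))
```

Threads in standard Python do not speed up CPU-bound work like this, so the example only shows the structure: independent work per partition, followed by a cheap final combination. In Spark, each partition would be handled by a different executor in the cluster.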

6. Python

It is one of the most popular and widely used languages for Big Data today. The reason is its usability: it is quite easy to understand compared to other programming languages. Of course, you need some basic programming knowledge to be able to use it.

Python is an interpreted language, which means that it executes code statement by statement without a separate compilation step. If an error occurs, it stops the execution and reports the error. It also has a large standard library and a huge ecosystem of third-party packages, which lets you find the functions you need quickly.

The drawback of this tool is speed, as it is noticeably slower than compiled languages.
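The behavior described above can be observed directly. In this small sketch, a three-line script (a made-up example) is executed from a string: the first line runs, the second raises an error, and the third is never reached.

```python
import traceback

# A hypothetical three-line script; its second line divides by zero.
script = """
results.append("step 1 done")
results.append(10 / 0)
results.append("step 3 done")
"""

results = []
report = None
try:
    exec(script, {"results": results})
except ZeroDivisionError as error:
    # Keep only the final "ErrorType: message" line of the traceback.
    report = "".join(traceback.format_exception_only(type(error), error)).strip()

print(results)  # only the work done before the error
print(report)
```

One nuance: Python first compiles the whole script to bytecode, so a syntax error anywhere in the file prevents even the first line from running. Errors that only appear at run time, such as this division by zero, stop the program at the statement where they occur.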

7. Apache Cassandra

Cassandra is a NoSQL database originally developed by Facebook. It is a very useful storage engine for applications that need to scale massively, and a strong option if you need scalability and high availability without compromising performance. Netflix and Reddit are users of this tool.

8. R

R is a programming language and environment focused mainly on statistical analysis, with a syntax that stays close to mathematical notation, although it is also used for Big Data analysis. It has a large user community, so a large number of libraries are available.

R is currently one of the most in-demand programming languages in the data science job market, which makes it a very popular Big Data tool.

9. Apache Drill

Drill is an open-source framework for large-scale interactive analysis of data sets. It was designed to scale across many servers and to process petabytes of data and millions of records in a few seconds.

The core of this software is the “Drillbit” service, which is responsible for accepting client requests, processing queries, and returning the results to the client.

One of its great advantages is that it can query SQL and NoSQL data sources as well as file systems, so a single query can combine data from multiple data stores.
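Combining several data stores in one query result can be pictured with a small Python sketch that joins a CSV source with a JSON source. It does not use Drill, which would express this as a SQL query. The sample data and the function name are hypothetical.

```python
import csv
import io
import json

# Two hypothetical sources in different formats, kept in memory for the example.
CUSTOMERS_CSV = "customer_id,name\n1,Ana\n2,Budi\n"
ORDERS_JSON = (
    '[{"customer_id": 1, "total": 30.0},'
    ' {"customer_id": 2, "total": 12.5},'
    ' {"customer_id": 1, "total": 7.5}]'
)


def total_per_customer(customers_csv, orders_json):
    """Join orders (JSON) with customers (CSV) and sum the totals per name."""
    names = {
        int(row["customer_id"]): row["name"]
        for row in csv.DictReader(io.StringIO(customers_csv))
    }
    totals = {}
    for order in json.loads(orders_json):
        name = names[order["customer_id"]]
        totals[name] = totals.get(name, 0.0) + order["total"]
    return totals


totals = total_per_customer(CUSTOMERS_CSV, ORDERS_JSON)
```

The point of a tool like Drill is that you do not have to write this glue code yourself: the join is expressed once, in a query, regardless of where each data set is stored.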

10. RapidMiner

RapidMiner is a Big Data analytics tool that helps with data management, machine learning model development, and model deployment. It comes with a number of plugins that allow you to build custom data mining methods and predictive analyses.

These are the 10 best Big Data tools that we propose. Would you recommend others? If so, feel free to leave a comment on the article and give us your suggestions.

If you are interested in knowing more about the world of data, we invite you to be part of our Master in Big Data Online where you will learn how to use it to get to know your client and thus improve your marketing strategy. Don’t wait any longer to sign up!
